One Qualitative Predictor

Qualitative Predictors

The simplest situation where dummy variables might be used in a regression model is when the qualitative predictor has only two levels. The regression model for a single quantitative predictor \((x_1)\) and a dummy variable \((D_1)\) is written

\[\begin{equation} Y = \beta_0 + \beta_1 x_1 + \beta_2 D_1 + \beta_3 x_1 D_1 + \varepsilon \end{equation}\] where \[\begin{equation*} D_1 = \begin{cases} 0 &\text{for the first level}\\ 1 &\text{for the second level.} \end{cases} \end{equation*}\]
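In R, a two-level factor in a model formula is turned into exactly this kind of 0/1 dummy variable by treatment contrasts, which are R's default for unordered factors. The sketch below uses a small hypothetical data frame `toy`, with a quantitative `x1` and a factor `group`. It calls `model.matrix()` to show the columns that correspond to \(1\), \(x_1\), \(D_1\), and \(x_1 D_1\).

```r
# Hypothetical toy data: x1 is quantitative, group is a two-level factor
toy <- data.frame(
  x1    = c(1, 2, 3, 4),
  group = factor(c("A", "A", "B", "B"))
)

# x1 * group expands to x1 + group + x1:group. With treatment contrasts,
# the first level ("A") gets D1 = 0 and the second level ("B") gets D1 = 1.
X <- model.matrix(~ x1 * group, data = toy)
print(X)
```

The `groupB` column plays the role of \(D_1\), and `x1:groupB` is the product \(x_1 D_1\).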

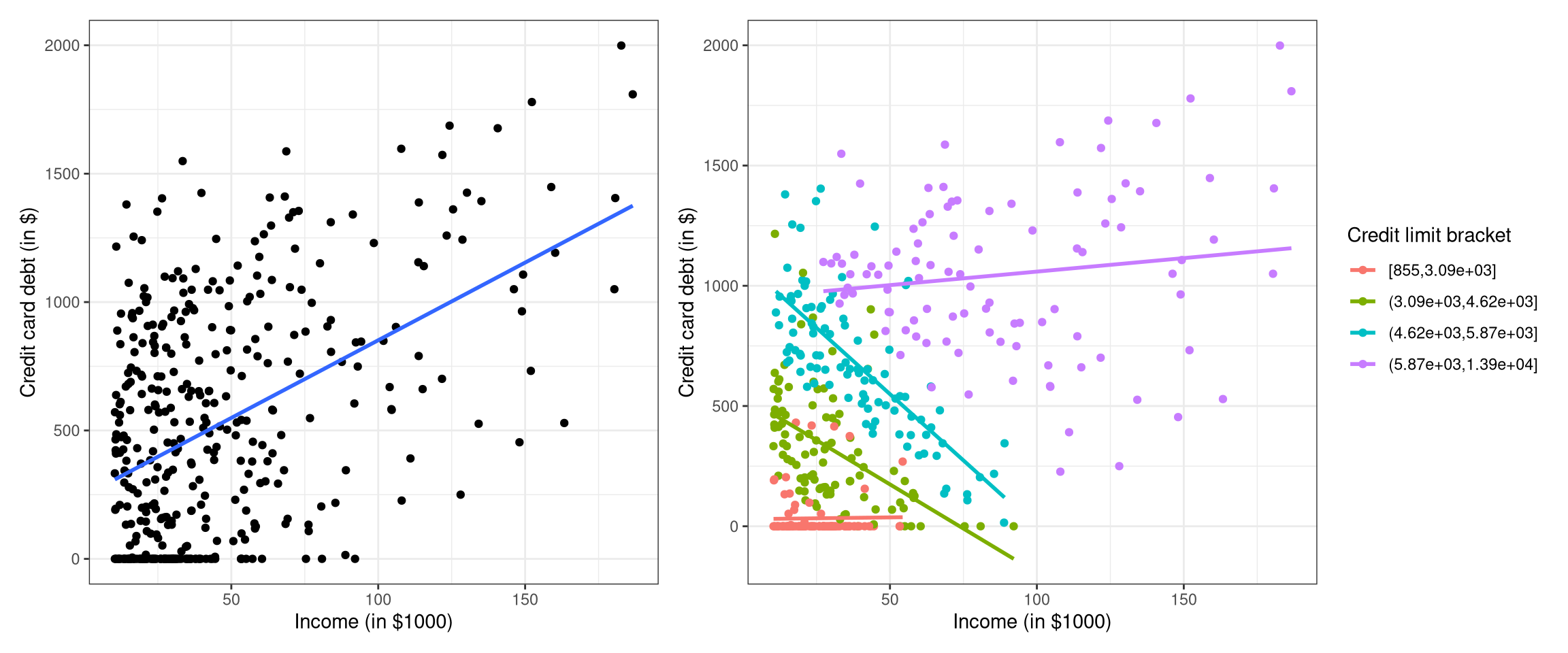

With a two-level qualitative predictor, the model above yields one of the four scenarios shown in Figure 1. Fitting this type of model involves answering three basic questions:

- Are the lines the same?

- Are the slopes the same?

- Are the intercepts the same?

To address whether the lines are the same, the null hypothesis \(H_0: \beta_2 = \beta_3 = 0\) must be tested. One way to perform the test is to use the general linear test statistic based on the full model and the reduced model \(Y = \beta_0 + \beta_1 x_1 + \varepsilon\). If the null hypothesis is not rejected, the interpretation is that a single line is present (the intercept and the slope are the same for both levels of the dummy variable). This is the case for graph I of Figure 1. If the null hypothesis is rejected, then the intercepts differ, the slopes differ, or both the intercepts and the slopes differ between the two levels of the dummy variable. These cases appear in graphs II, III, and IV of Figure 1, respectively.
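The general linear test compares the error sums of squares of the reduced and full models through \(F^* = \frac{[SSE(R) - SSE(F)]/(df_R - df_F)}{SSE(F)/df_F}\). The R sketch below computes this statistic by hand and checks it against `anova()` applied to the two nested `lm()` fits. The data are simulated, and the coefficients are hypothetical values chosen so that both the intercept and the slope differ between levels. The numbers therefore illustrate the mechanics only.

```r
# Hypothetical simulated data: the two levels differ in intercept and slope
set.seed(1)
n  <- 100
x1 <- runif(n, 0, 10)
D1 <- rep(c(0, 1), each = n / 2)
y  <- 5 + 2 * x1 + 3 * D1 + 1.5 * x1 * D1 + rnorm(n)

full    <- lm(y ~ x1 + D1 + x1:D1)  # Y = b0 + b1 x1 + b2 D1 + b3 x1 D1
reduced <- lm(y ~ x1)               # one common line under H0: b2 = b3 = 0

# General linear test statistic computed by hand:
# F = [(SSE(R) - SSE(F)) / (df_R - df_F)] / [SSE(F) / df_F]
sse_r <- deviance(reduced)          # residual sum of squares of each lm fit
sse_f <- deviance(full)
df_r  <- df.residual(reduced)
df_f  <- df.residual(full)
F_glt <- ((sse_r - sse_f) / (df_r - df_f)) / (sse_f / df_f)
p_glt <- pf(F_glt, df_r - df_f, df_f, lower.tail = FALSE)

# anova() on the nested pair reports the same F statistic and p-value
glt <- anova(reduced, full)
print(glt)
```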

To answer whether the slopes are the same, the null hypothesis \(H_0: \beta_3 = 0\) must be tested. If the null hypothesis is not rejected, the two lines have the same slope, but different intercepts, as shown in graph II of Figure 1. The two parallel lines that result when \(\beta_3 = 0\) are

\[\begin{equation*} Y = \beta_0 + \beta_1 x_1 + \varepsilon \text{ for } (D_1 = 0) \quad\text{and}\quad Y = (\beta_0 +\beta_2) + \beta_1 x_1 + \varepsilon \text{ for } (D_1 = 1). \end{equation*}\]When \(H_0: \beta_3=0\) is rejected, one concludes that the two fitted lines are not parallel, as in graphs III and IV of Figure 1.
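The \(t\) test of \(\beta_3\) in the full model is equivalent to the general linear test whose reduced model is the parallel-lines model \(Y = \beta_0 + \beta_1 x_1 + \beta_2 D_1 + \varepsilon\). The \(F\) statistic equals the square of the \(t\) statistic, and the two p-values agree. The sketch below checks this on simulated data that were generated with parallel lines (\(\beta_3 = 0\)). The coefficient values are hypothetical.

```r
# Hypothetical simulated data with parallel lines: beta3 = 0
set.seed(1)
x1 <- runif(100, 0, 10)
D1 <- rep(c(0, 1), each = 50)
y  <- 5 + 2 * x1 + 3 * D1 + rnorm(100)

full     <- lm(y ~ x1 + D1 + x1:D1)
parallel <- lm(y ~ x1 + D1)          # reduced model under H0: beta3 = 0

# t test of beta3 from the full-model coefficient table
tab  <- coef(summary(full))
t_b3 <- tab["x1:D1", "t value"]
p_b3 <- tab["x1:D1", "Pr(>|t|)"]

# General linear test comparing the parallel-lines and full models
glt <- anova(parallel, full)
print(c(t_squared = t_b3^2, F = glt[["F"]][2],
        p_t = p_b3, p_F = glt[["Pr(>F)"]][2]))
```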

To answer whether the intercepts are the same, the null hypothesis \(H_0: \beta_2 = 0\) for the full model must be tested. The reduced model for this test is \(Y = \beta_0 + \beta_1 x_1 + \beta_3 x_1 D_1 + \varepsilon\). If the null hypothesis is not rejected, the two fitted lines have the same intercept but different slopes:

\[\begin{equation*} Y = \beta_0 + \beta_1 x_1 + \varepsilon \text{ for } (D_1 = 0) \quad\text{and}\quad Y = \beta_0 + (\beta_1 +\beta_3) x_1 + \varepsilon \text{ for } (D_1 = 1). \end{equation*}\]Graph III of Figure 1 represents this situation. If the null hypothesis is rejected, one concludes that the two lines have different intercepts, as in graphs II and IV of Figure 1.
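The intercept test can be sketched the same way. The reduced model drops \(D_1\) but keeps \(x_1 D_1\). In R, the formula `y ~ x1 + x1:D1` gives one shared intercept and two slopes, \(\beta_1\) and \(\beta_1 + \beta_3\). The data below are simulated with a common intercept (\(\beta_2 = 0\)), and the coefficient values are hypothetical.

```r
# Hypothetical simulated data with a common intercept: beta2 = 0
set.seed(2)
x1 <- runif(100, 0, 10)
D1 <- rep(c(0, 1), each = 50)
y  <- 5 + 2 * x1 + 1.5 * x1 * D1 + rnorm(100)

full       <- lm(y ~ x1 + D1 + x1:D1)
common_int <- lm(y ~ x1 + x1:D1)     # reduced model under H0: beta2 = 0

glt <- anova(common_int, full)       # general linear test of H0: beta2 = 0
print(glt)

# Fitted lines from the reduced model: one intercept, two slopes
b <- coef(common_int)
slope_D0 <- b[["x1"]]
slope_D1 <- b[["x1"]] + b[["x1:D1"]]
print(c(intercept = b[["(Intercept)"]], slope_D0 = slope_D0, slope_D1 = slope_D1))
```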

Example

Suppose a realtor wants to model the appraised price of an apartment as a function of two predictors: living area (in m\(^2\)) and the presence or absence of elevators. Consider the data frame VIT2005, which contains data about apartments in Vitoria, Spain. Its variables include totalprice, area, and elevator, which are the appraised apartment value in Euros, the living space in square meters, and the absence or presence of at least one elevator in the building, respectively. The realtor first wants to know if there is any relationship between appraised price \((Y)\) and living area \((x_1)\). Next, the realtor wants to know how adding a dummy variable for whether or not an elevator is present changes the relationship: Are the lines the same? Are the slopes the same? Are the intercepts the same?

Solution (is there a relationship between totalprice and area?):

A linear regression model of the form

\[\begin{equation} Y= \beta_0 +\beta_1 x_1 + \varepsilon \end{equation}\]is fit, yielding

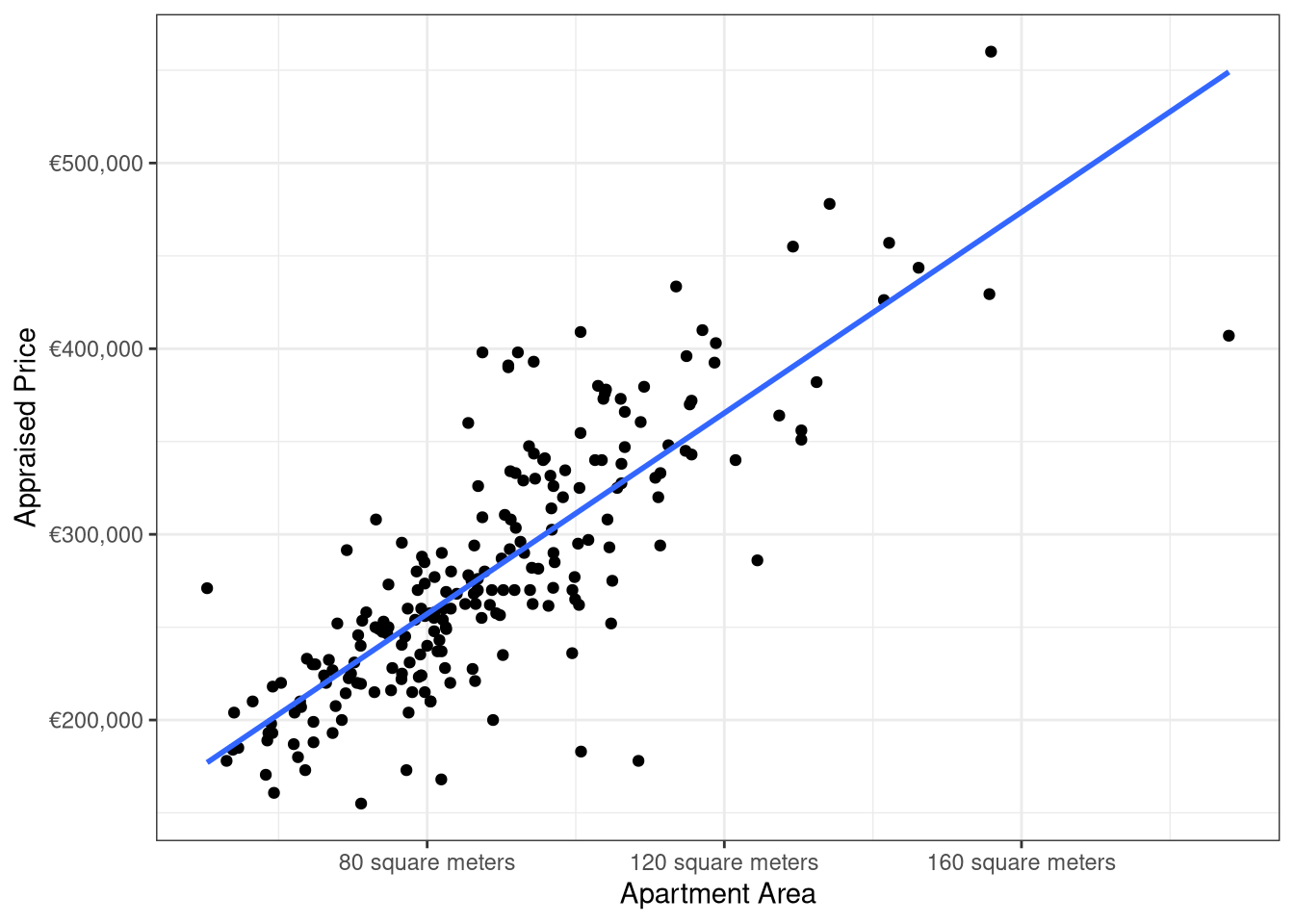

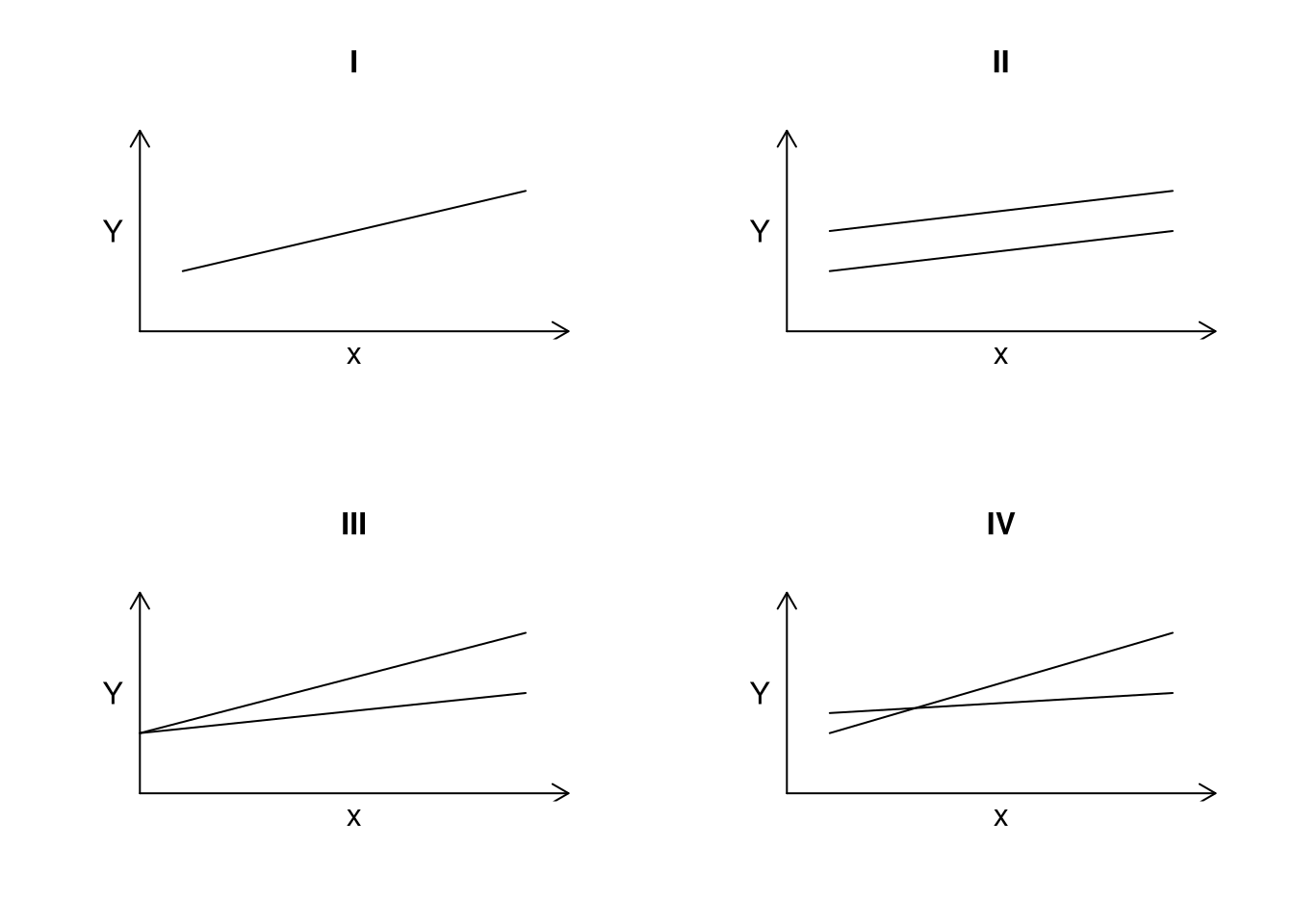

\[\begin{equation*} \widehat{Y}_i = 40{,}822 + 2{,}705 x_{i1} \end{equation*}\]and a scatterplot of totalprice versus area with the fitted regression line superimposed is shown in Figure 2.

Based on Figure 2, there appears to be a linear relationship between appraised price and living area. Further, this relationship is statistically significant, as the p-value for testing \(H_0: \beta_1=0\) versus \(H_1: \beta_1 \neq 0\) is less than \(2 \times 10^{-16}\).
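Here is a minimal R sketch of this fit. It assumes that the `VIT2005` data frame comes from the PASWR2 package. The object name `mod_simple` is illustrative and does not come from the original analysis.

```r
library(PASWR2)  # assumed source of the VIT2005 data frame

# Simple linear regression of appraised price on living area
mod_simple <- lm(totalprice ~ area, data = VIT2005)
summary(mod_simple)

# p-value for H0: beta1 = 0 versus H1: beta1 != 0
coef(summary(mod_simple))["area", "Pr(>|t|)"]
```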

Solution (does adding a dummy variable (elevator) change the relationship?):

The regression model including the dummy variable for elevator is written \[\begin{equation} Y = \beta_0 + \beta_1 x_1 + \beta_2 D_1 + \beta_3 x_1 D_1 + \varepsilon \end{equation}\] where \[\begin{equation*} D_1 = \begin{cases} 0 &\text{when a building has no elevators}\\ 1 &\text{when a building has at least one elevator.} \end{cases} \end{equation*}\]

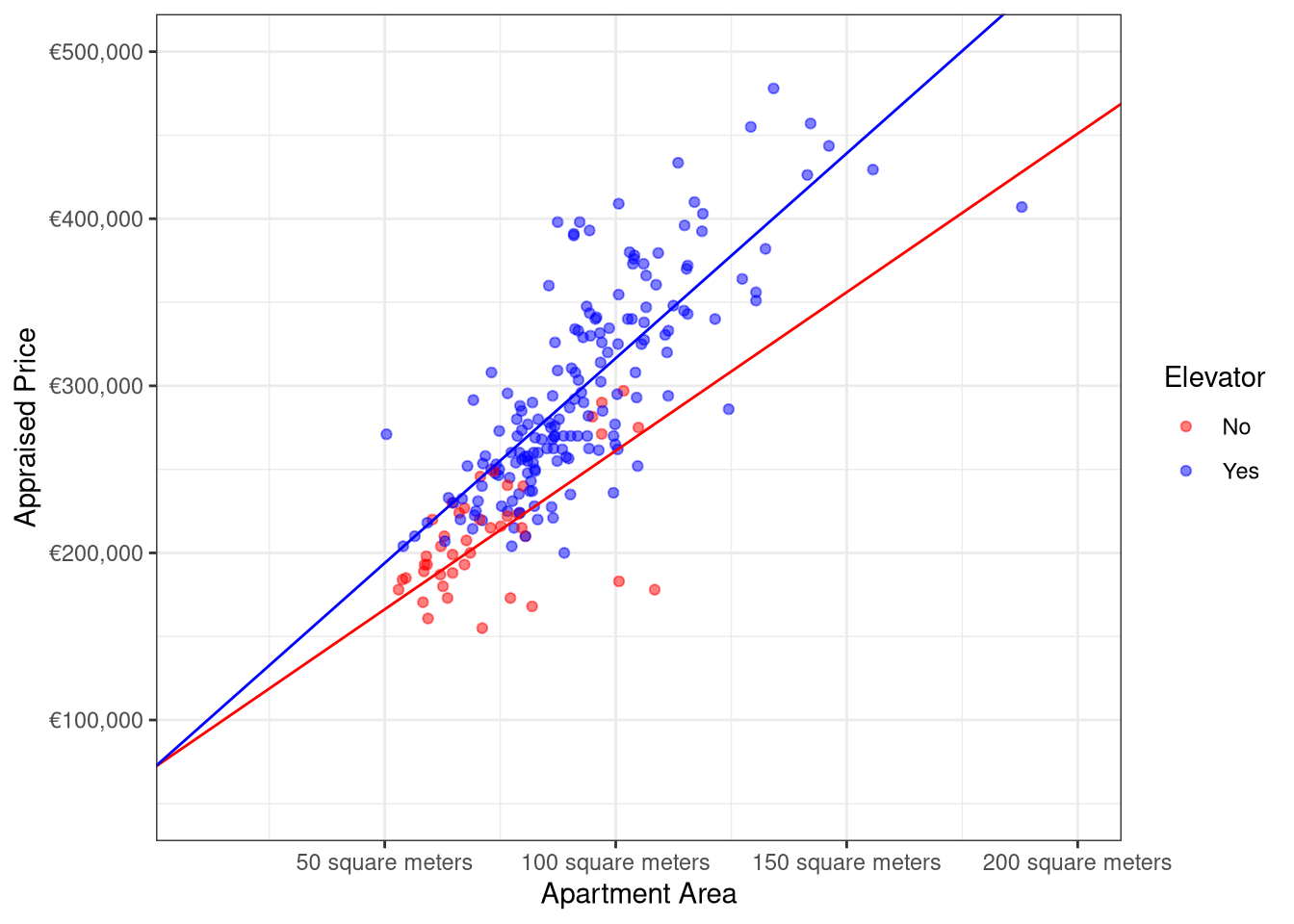

To determine if the lines are the same (which means that the linear relationship between appraised price and living area is the same for apartments with and without elevators), the hypotheses are

\[\begin{equation*} H_0: \beta_2 = \beta_3 = 0 \text{ versus } H_1: \text{at least one } \beta_i \neq 0 \text{ for } i=2, 3. \end{equation*}\]In this problem, the p-value is \(9.4780144\times 10^{-9}\), so one may conclude that at least one of \(\beta_2\) and \(\beta_3\) is not zero. In other words, the lines have different intercepts, different slopes, or both.
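This test can be sketched in R with `anova()` on the nested pair of models. The sketch assumes that `VIT2005` comes from the PASWR2 package, and the names `mod_simple`, `mod_full`, and `lines_test` are illustrative. The formula `area * elevator` expands to `area + elevator + area:elevator`, which matches the full model whether `elevator` is stored as 0/1 or as a two-level factor.

```r
library(PASWR2)  # assumed source of the VIT2005 data frame

mod_simple <- lm(totalprice ~ area, data = VIT2005)             # reduced: one line
mod_full   <- lm(totalprice ~ area * elevator, data = VIT2005)  # full: area + elevator + area:elevator

# General linear test of H0: beta2 = beta3 = 0
lines_test <- anova(mod_simple, mod_full)
lines_test
```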

To see if the lines have the same slope (which would mean that the presence of an elevator adds a constant amount to the appraised price regardless of living area), the hypotheses are \[\begin{equation*} H_0: \beta_3 = 0 \text{ versus } H_1: \beta_3 \neq 0. \end{equation*}\]

Because the p-value is \(0.0043797\), one may conclude that \(\beta_3 \neq 0\), which implies that the lines are not parallel.

To test for equal intercepts (which would mean that the fitted lines for apartments with and without elevators take the same value at zero living area), the hypotheses to be evaluated are \[\begin{equation*} H_0: \beta_2 = 0 \text{ versus }H_1: \beta_2 \neq 0. \end{equation*}\]

Since the p-value for testing the null hypothesis is 0.1132635, one fails to reject \(H_0\). Together with the slope test, this suggests that the two lines share an intercept but have different slopes.
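Both of these tests are \(t\) tests on single coefficients of the full model, so both p-values can be read from one coefficient table. The sketch below assumes that `VIT2005` comes from the PASWR2 package. The object names are illustrative. The code selects rows by position rather than by name because the coefficient labels differ depending on whether `elevator` is stored as 0/1 or as a factor.

```r
library(PASWR2)  # assumed source of the VIT2005 data frame

mod_full <- lm(totalprice ~ area * elevator, data = VIT2005)
tab <- coef(summary(mod_full))

# Rows follow the formula order: (Intercept), area, elevator, area:elevator.
# Row 3 tests H0: beta2 = 0 (intercepts); row 4 tests H0: beta3 = 0 (slopes).
p_intercepts <- tab[3, "Pr(>|t|)"]
p_slopes     <- tab[4, "Pr(>|t|)"]
c(intercepts = p_intercepts, slopes = p_slopes)
```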

The fitted model is \(\widehat{Y}_i = 71{,}352 + 1{,}898x_{i1} + 554x_{i1}D_{i1}\), and the fitted regression lines for the two values of \(D_1\) are shown in Figure 3. The fitted model with a common intercept and different slopes has an \(R^2_a\) of 0.7033949. This is a modest improvement over the model without the variable elevator, which had an \(R^2_a\) of 0.6532269.
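Substituting the two values of \(D_{i1}\) into the fitted model gives the lines in Figure 3 explicitly. The slope for buildings with an elevator is the sum of the two slope estimates:

\[\begin{equation*} \widehat{Y}_i = 71{,}352 + 1{,}898x_{i1} \text{ for } (D_{i1} = 0) \quad\text{and}\quad \widehat{Y}_i = 71{,}352 + (1{,}898 + 554)x_{i1} = 71{,}352 + 2{,}452x_{i1} \text{ for } (D_{i1} = 1). \end{equation*}\]

Thus each additional square meter is associated with an estimated €554 more in appraised price for apartments in buildings with an elevator than for apartments in buildings without one.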

Code

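```r
library(PASWR2)   # VIT2005 data (assumed source)
library(ggplot2)
library(scales)   # label_currency(), label_number()

# Common-intercept model from the text: Y = b0 + b1*x1 + b3*x1*D1
mod_inter <- lm(totalprice ~ area + area:elevator, data = VIT2005)
b0    <- coef(mod_inter)[[1]]                          # shared intercept
b1NO  <- coef(mod_inter)[[2]]                          # slope without elevator
b1YES <- coef(mod_inter)[[2]] + coef(mod_inter)[[3]]   # slope with elevator

# factor() gives a discrete color scale whether elevator is stored as 0/1 or as a factor
ggplot(data = VIT2005, aes(x = area, y = totalprice, color = factor(elevator))) +
  geom_point(alpha = 0.5) +
  theme_bw() +
  geom_abline(intercept = b0, slope = b1NO, color = "red") +
  geom_abline(intercept = b0, slope = b1YES, color = "blue") +
  scale_color_manual(values = c("red", "blue")) +
  labs(x = "Apartment Area",
       y = "Appraised Price",
       color = "Elevator") +
  scale_y_continuous(labels = label_currency(prefix = "€"),
                     limits = c(50000, 500000)) +
  scale_x_continuous(labels = label_number(suffix = " square meters"),
                     limits = c(10, 200))

mod_inter
```

Diagnostics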

Example

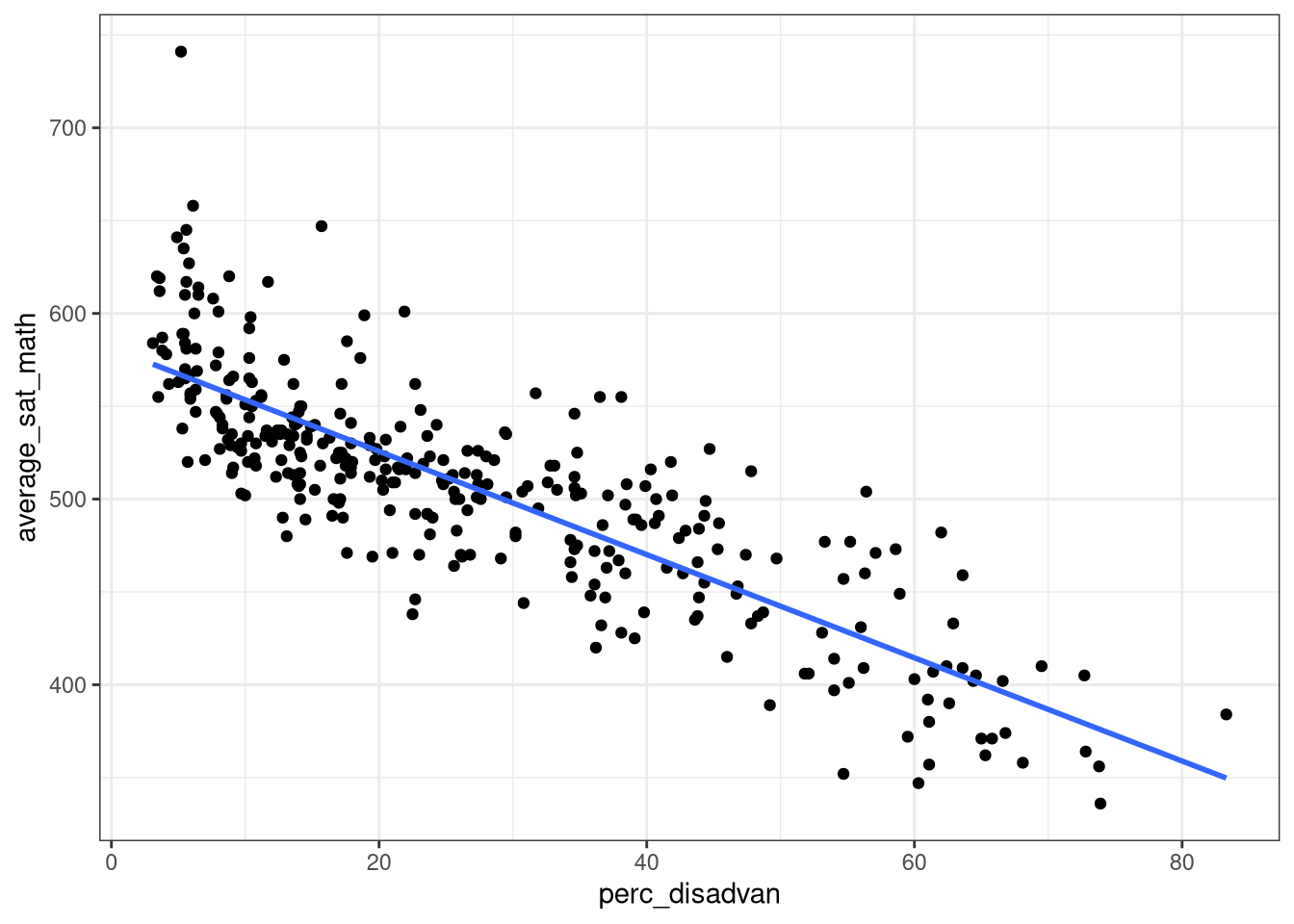

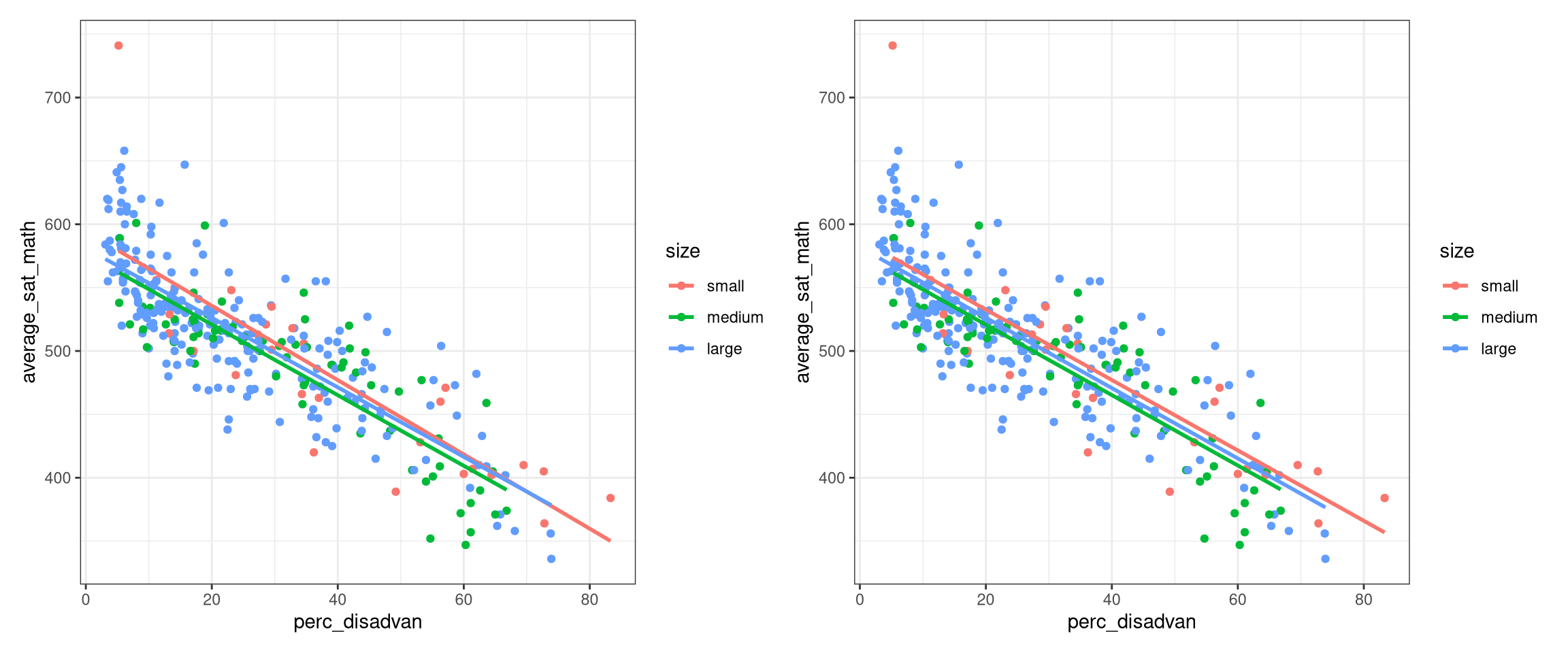

Consider the MA_schools data frame from the moderndive package, which contains 2017 data on Massachusetts public high schools provided by the Massachusetts Department of Education. Consider a model of SAT math scores (average_sat_math) as a function of two predictors. The first is the percentage of the high school’s student body that is economically disadvantaged (perc_disadvan). The second is a categorical variable measuring school size (size).

Solution (is there a relationship between average_sat_math and perc_disadvan?):

You complete the rest.